It could not upload it all!

Tagged: Takes very long time to upload.

- This topic has 14 replies, 2 voices, and was last updated 10 years ago by udadmin.

December 20, 2014 at 9:39 am #70585 Raymond (Participant)

Wow, it was taking a long time, and both cores on my server were running at 100% for hours! It’s super slow to upload, I guess because it’s using Apache.

The backup has 6 big data .zip files. It did not upload all of the first one: it stopped at about 1.5 GB, when the zip should have been about 2 GB.

I can use Samba on both of my servers. As I typed this, I dragged and dropped the 2 GB file to the same place where UpdraftPlus should have put it. It only took about 3 minutes to upload it that way!

I can only guess that the upload with UpdraftPlus errored because it took so long that the hard drive went to sleep. Not sure.

You can see in that photo that it only takes about 16 minutes to upload the rest of the big backup .zip files.

I guess with UpdraftPlus it would have been more than 24 hours, with both CPU cores at 100%.

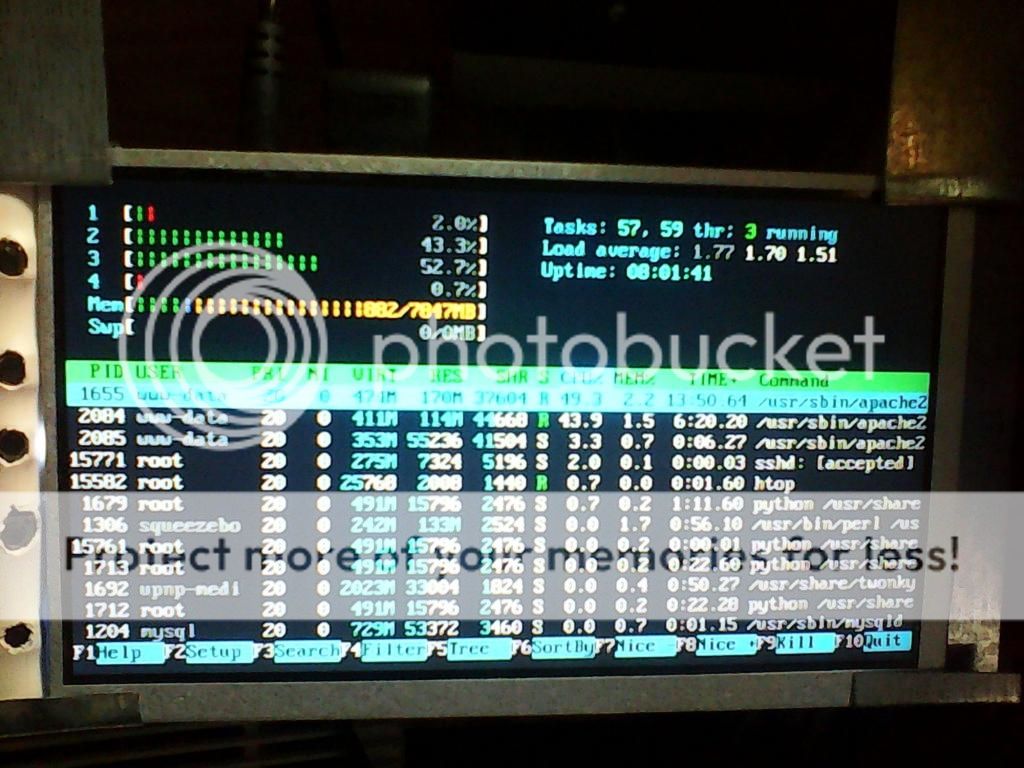

I have a little LCD screen on my server, and I took this photo of htop while UpdraftPlus was uploading.

Not sure if there is a better, faster way to upload from within WordPress, one that doesn’t take a lot of CPU power and time.

-Raymond Day

December 20, 2014 at 11:50 am #70622 udadmin (Keymaster)

Hi Ray,

Please can you post the backup log file? (Find it on the “Existing Backups” tab – each backup has a log button). Use pastebin.com or something similar to post it, as it’ll be too long for this forum.

I would imagine that UD hadn’t actually reached the uploading stage yet, because uploading does not use any significant CPU resources. It’s creating the zips that uses CPU and disk I/O, as files are read, written and compressed (which is unavoidable with a zip-based backup – see: https://updraftplus.com/faqs/how-much-resources-does-updraftplus-need/ ).

Best wishes,

David

December 20, 2014 at 11:52 am #70625 udadmin (Keymaster)

P.S. I notice from the screenshot that you’ve put the ‘split’ size up to 2 GB. The link in my previous post explains why this will very significantly increase CPU and disk I/O usage.

Best wishes,

David

December 22, 2014 at 11:10 am #71438 Raymond (Participant)

I guess it was trying to back up last night. It looks like it did delete the older backup, and when it started to upload the new big .zip file it just stopped; that’s the last line in the log file.

Here are about the last 20 lines of the log file.

7980.751 (9) UpdraftPlus WordPress backup plugin (https://updraftplus.com): 1.9.45.19 WP: 4.1 PHP: 5.5.9-1ubuntu4.5 (Linux ubuntu-i3 3.13.0-39-generic #66-Ubuntu SMP Tue Oct 28 13:30:27 UTC 2014 x86_64) MySQL: Server: Apache/2.4.7 (Ubuntu) safe_mode: 0 max_execution_time: 900 memory_limit: 256M (used: 2.7M | 3M) multisite: N mcrypt: N LANG: C ZipArchive::addFile: Y

7980.751 (9) Free space on disk containing Updraft’s temporary directory: 1282574.5 Mb

7980.752 (9) Backup run: resumption=9, nonce=489757871857, begun at=1419177603 (7980s ago), job type=backup

7980.752 (9) The current run is resumption number 9, and there was nothing useful done on the last run (last useful run: 0) – will not schedule a further attempt until we see something useful happening this time

7980.753 (9) Creation of backups of directories: already finished

7980.753 (9) Saving backup status to database (elements: 14)

7980.754 (9) Database dump (WordPress DB): Creation was completed already

7980.754 (9) Saving backup history

7980.756 (9) backup_2014-12-21-1100_my_weblog_489757871857-plugins.zip: plugins: This file has already been successfully uploaded

7980.756 (9) backup_2014-12-21-1100_my_weblog_489757871857-themes.zip: themes: This file has already been successfully uploaded

7980.756 (9) backup_2014-12-21-1100_my_weblog_489757871857-uploads.zip: uploads: This file has not yet been successfully uploaded: will queue

7980.757 (9) backup_2014-12-21-1100_my_weblog_489757871857-uploads2.zip: uploads: This file has not yet been successfully uploaded: will queue

7980.757 (9) backup_2014-12-21-1100_my_weblog_489757871857-uploads3.zip: uploads: This file has not yet been successfully uploaded: will queue

7980.757 (9) backup_2014-12-21-1100_my_weblog_489757871857-uploads4.zip: uploads: This file has not yet been successfully uploaded: will queue

7980.757 (9) backup_2014-12-21-1100_my_weblog_489757871857-uploads5.zip: uploads: This file has not yet been successfully uploaded: will queue

7980.758 (9) backup_2014-12-21-1100_my_weblog_489757871857-uploads6.zip: uploads: This file has not yet been successfully uploaded: will queue

7980.758 (9) backup_2014-12-21-1100_my_weblog_489757871857-uploads7.zip: uploads: This file has not yet been successfully uploaded: will queue

7980.758 (9) backup_2014-12-21-1100_my_weblog_489757871857-others.zip: others: This file has not yet been successfully uploaded: will queue

7980.758 (9) backup_2014-12-21-1100_my_weblog_489757871857-db.gz: db: This file has not yet been successfully uploaded: will queue

7980.759 (9) Requesting upload of the files that have not yet been successfully uploaded (9)

7980.760 (9) Cloud backup selection: sftp

7980.760 (9) Beginning dispatch of backup to remote (sftp)

7982.054 (9) SFTP: Successfully logged in

7982.054 (9) SCP/SFTP upload: attempt: backup_2014-12-21-1100_my_weblog_489757871857-uploads.zip

I guess that may be the most useful part of the log to see.

Under the top backup date, the Existing Backups tab says:

Dec 21, 2014 11:00

(Not finished)

-Raymond Day

December 22, 2014 at 5:26 pm #71548 udadmin (Keymaster)

Hi Ray,

The SFTP file storage method doesn’t use chunked/resumable uploads (because these weren’t available in the PHP SFTP library). As a result, the PHP timeout on your webserver has to be high enough to upload 100% of the zip archive before the timeout hits.
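To illustrate what chunked/resumable uploading buys you, here is a minimal sketch that uses plain local files instead of SFTP. It is not UpdraftPlus’s code: the function name `copy_chunked` and the default chunk size are made up for the example. Each run continues from however many bytes the destination already holds, so an interrupted run loses at most one chunk instead of the whole file.

```shell
#!/bin/bash
# Sketch only: resumable, chunked copy between two local files.
# Usage: copy_chunked SRC DST [CHUNK_BYTES]
copy_chunked() {
    local src=$1 dst=$2 chunk=${3:-1048576}
    local total done_bytes
    total=$(($(wc -c < "$src")))
    # Resume point: whatever is already at the destination (0 if absent).
    if [ -f "$dst" ]; then
        done_bytes=$(($(wc -c < "$dst")))
    else
        done_bytes=0
    fi
    while [ "$done_bytes" -lt "$total" ]; do
        # Read one chunk starting at the current offset and append it.
        tail -c +"$((done_bytes + 1))" "$src" | head -c "$chunk" >> "$dst"
        done_bytes=$(($(wc -c < "$dst")))
    done
}
```

Calling `copy_chunked big.zip /mnt/backup/big.zip` a second time after a timeout (both paths hypothetical) would carry on from the partial file rather than starting again, which is the property a non-chunked upload lacks.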

You can work around this by any of these:

a) increasing the PHP timeout

or

b) reducing the ‘split’ size in the expert section at the bottom of the ‘Settings’ tab, to produce smaller zip files

or

c) switching to a remote storage method that supports chunked/resumable uploading: these include FTP, FTPS, S3, Dropbox, Rackspace, Google Drive, Copy.Com.

Best wishes,

David

December 25, 2014 at 5:20 pm #72756 Raymond (Participant)

I don’t get why it always stops uploading on the first backup .zip file, and why it takes hours and a lot of CPU time to do it.

I just heard the CPU fan on my server running a lot, so I ran htop to see why. It was UpdraftPlus doing its backup.

I took this photo to show that.

I have a script that can back up my WordPress very fast. In about 3 minutes it backs up my database and my WordPress uploads folder. The uploads folder has a lot of photos and videos in it, so it’s big.

Here is the script; I bet others would like it.

    #!/bin/bash
    # Variables
    name_base=$(date +"%Y-%m-%d")   # name base for the archive, taken from the date
    backupdir="/media/USBdisk2-3TB/home/part2/mystuff/WordPress_Backup"
    contentdir="/usr/share/wordpress/wp-content/uploads/"
    # Start backup: create the tar file from the uploads folder
    tar cvf "$backupdir/WordPress_Data-$name_base.tar" "$contentdir"
    # Dump the WordPress database
    mysqldump -u root --password=########## --databases wordpress > "$backupdir/WordPress_Data-$name_base.sql"
    # Add the MySQL dump to the archive
    tar -rvf "$backupdir/WordPress_Data-$name_base.tar" "$backupdir/WordPress_Data-$name_base.sql"
    # Cleanup
    rm "$backupdir/WordPress_Data-$name_base.sql"

You can just change the variables at the top for other servers and it will work.

I just need to make it save to my other LAN server, which will be easy.

I can log in to it without having a password in the script because I set up a long key between the two servers, with just 2 easy commands like this:

    ssh-keygen -t rsa
    cat /root/.ssh/id_rsa.pub | ssh [email protected] "mkdir -p ~/.ssh && cat >> /root/.ssh/authorized_keys"

Others will have to change that a little too, for example the IP.

Then I have to get it to keep only 2 or so backups and delete the older ones.

This seems like a better, faster way to back up WordPress.

I guess in a few days I may have the script doing the 2 other things I mentioned. It seems a lot better than UpdraftPlus.

I can just have a cron job run this script every 24 hours.
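The “keep only 2 or so backups” step mentioned above could look something like this. It is only a sketch: the function name `prune_backups` is made up, and it assumes the archives keep the `WordPress_Data-YYYY-MM-DD.tar` naming from the script, so that sorting by name is the same as sorting by date.

```shell
#!/bin/bash
# Sketch only: keep the newest KEEP archives in DIR and delete the older ones.
# Usage: prune_backups DIR [KEEP]
prune_backups() {
    local dir=$1 keep=${2:-2}
    # The glob expands in name order, which is date order for these names.
    set -- "$dir"/WordPress_Data-*.tar
    [ -e "$1" ] || return 0        # glob matched nothing: no archives yet
    while [ "$#" -gt "$keep" ]; do
        rm -- "$1"                 # oldest archive first
        shift
    done
}
```

For the daily run, a crontab line such as `0 3 * * * /root/wp-backup.sh` would start the script at 03:00 every day; the script path and the time are placeholders.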

-Raymond Day

December 26, 2014 at 12:46 am #72881 Raymond (Participant)

I put this post here because it would not let me edit the earlier one, but then I found out I can change the post number in the URL to edit it. It doesn’t look like I can delete this post, though.

-Raymond Day

December 26, 2014 at 1:49 am #72898 Raymond (Participant)

Here is what the log always says at the end:

17556.216 (9) Saving backup history

17556.218 (9) backup_2014-12-25-1101_my_weblog_915fece0ab3b-plugins.zip: plugins: This file has already been successfully uploaded

17556.219 (9) backup_2014-12-25-1101_my_weblog_915fece0ab3b-themes.zip: themes: This file has already been successfully uploaded

17556.219 (9) backup_2014-12-25-1101_my_weblog_915fece0ab3b-uploads.zip: uploads: This file has not yet been successfully uploaded: will queue

17556.219 (9) backup_2014-12-25-1101_my_weblog_915fece0ab3b-uploads2.zip: uploads: This file has not yet been successfully uploaded: will queue

17556.219 (9) backup_2014-12-25-1101_my_weblog_915fece0ab3b-uploads3.zip: uploads: This file has not yet been successfully uploaded: will queue

17556.220 (9) backup_2014-12-25-1101_my_weblog_915fece0ab3b-uploads4.zip: uploads: This file has not yet been successfully uploaded: will queue

17556.220 (9) backup_2014-12-25-1101_my_weblog_915fece0ab3b-uploads5.zip: uploads: This file has not yet been successfully uploaded: will queue

17556.220 (9) backup_2014-12-25-1101_my_weblog_915fece0ab3b-uploads6.zip: uploads: This file has not yet been successfully uploaded: will queue

17556.220 (9) backup_2014-12-25-1101_my_weblog_915fece0ab3b-uploads7.zip: uploads: This file has not yet been successfully uploaded: will queue

17556.220 (9) backup_2014-12-25-1101_my_weblog_915fece0ab3b-uploads8.zip: uploads: This file has not yet been successfully uploaded: will queue

17556.221 (9) backup_2014-12-25-1101_my_weblog_915fece0ab3b-others.zip: others: This file has not yet been successfully uploaded: will queue

17556.221 (9) backup_2014-12-25-1101_my_weblog_915fece0ab3b-others2.zip: others: This file has not yet been successfully uploaded: will queue

17556.221 (9) backup_2014-12-25-1101_my_weblog_915fece0ab3b-db.gz: db: This file has not yet been successfully uploaded: will queue

17556.222 (9) Requesting upload of the files that have not yet been successfully uploaded (11)

17556.222 (9) Cloud backup selection: sftp

17556.223 (9) Beginning dispatch of backup to remote (sftp)

17573.830 (9) SFTP: Successfully logged in

17573.831 (9) SCP/SFTP upload: attempt: backup_2014-12-25-1101_my_weblog_915fece0ab3b-uploads.zip

It always uploads the first 2 files, but it only ever uploads about 1/3 of the first big .zip file.

-Raymond Day

December 26, 2014 at 10:40 am #73042 Raymond (Participant)

I just edited my /etc/php5/apache2/php.ini file.

upload_max_filesize = 256M

and:

max_execution_time = 280

The max time was previously set to 30.

Then I restarted Apache with:

service apache2 restart

Time will tell if this works.
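To double-check what is actually set in that file after an edit, a small helper like the one below can print a directive’s value. This is a sketch only, and the function name `ini_value` is made up for the example. Two hedged notes: the header line of the UpdraftPlus log also reports the `max_execution_time` in effect for the backup run (900 in the log posted earlier), and `upload_max_filesize` limits files uploaded to PHP over HTTP, so as far as I know it is not expected to affect an outgoing SFTP transfer.

```shell
#!/bin/bash
# Sketch only: print the last uncommented value of a directive in a php.ini file.
# Usage: ini_value KEY FILE
ini_value() {
    local key=$1 file=$2
    # Keep only "key = value" lines (lines starting with ";" are comments
    # and do not match), strip the key and the "=", and take the last one.
    sed -n "s/^[[:space:]]*${key}[[:space:]]*=[[:space:]]*//p" "$file" | tail -n 1
}
```

For example, `ini_value max_execution_time /etc/php5/apache2/php.ini` should print the value set above.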

My upload is set to:

SFTP / SCP

So it must be using SFTP, but maybe it is using SCP and that’s why it doesn’t work. Not sure.

-Raymond Day

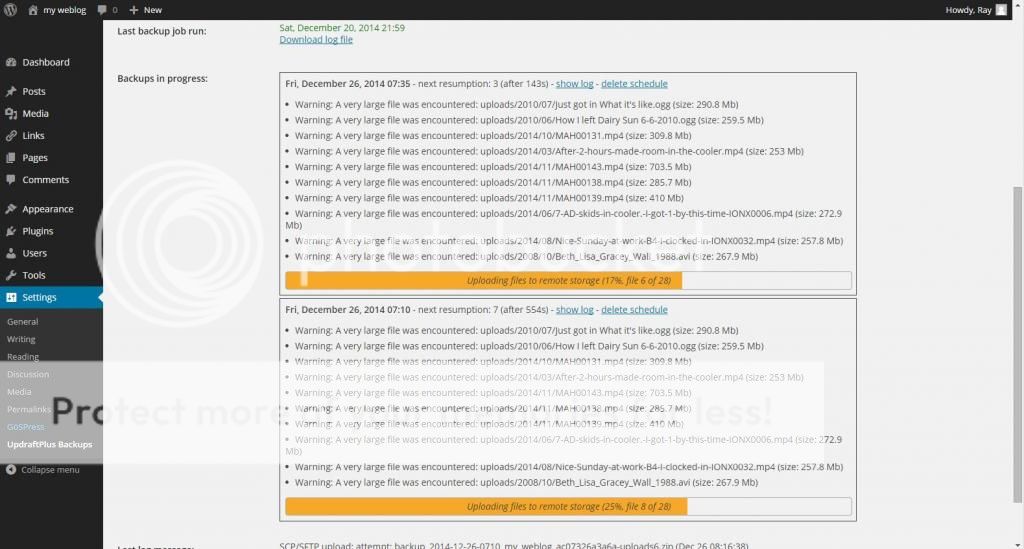

December 26, 2014 at 11:56 am #73064 Raymond (Participant)

Still not working. It always gets stuck at:

Uploading files to remote storage (18%, file 3 of 11)

This shows too:

Fri, December 26, 2014 06:11 – next resumption: 3 (after 629s) –

I guess it gives up after 10 resumptions.

After this backup stops, I will see if I can get it to save hundreds of small files as one big backup.

It is using SFTP too, because in the log I saw this:

Beginning dispatch of backup to remote (sftp)

so it should do big chunks of .zip file backups.

-Raymond Day

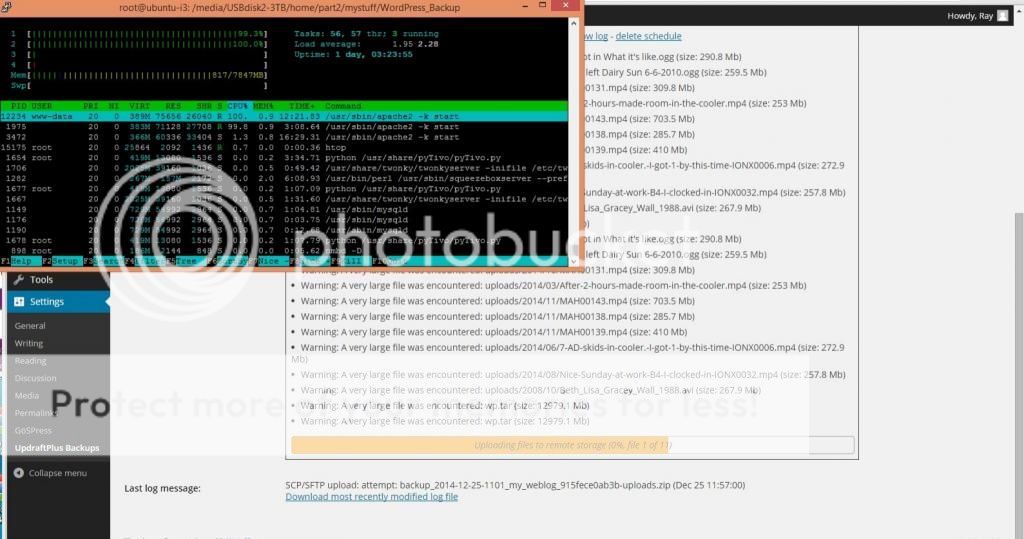

December 26, 2014 at 1:24 pm #73088 Raymond (Participant)

I set the upload chunk (the ‘split’ size) from 2000 to 500 and now it looks like it’s working!

I clicked to start the backup and it looks like it automatically started a new one. It looks like it will finish this time.

I guess I could set the size to 1500, because that seems to be where it errored on the big .zip files. But to be on the safe side, I guess 1300 may be good.
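A rough way to pick a split size instead of guessing: when each archive has to upload within a single PHP run, the largest safe archive is roughly the upload rate times the timeout, minus a margin. The sketch below assumes the first zip reached about 1500 MB within the 900-second `max_execution_time` shown in the earlier log. That pairing is an assumption, not something the log states, and the function name and the 20% default margin are made up.

```shell
#!/bin/bash
# Sketch only: largest split size (MB) that should upload within one PHP run.
# Usage: max_split_mb OBSERVED_MB OBSERVED_SECONDS TIMEOUT_SECONDS [MARGIN_PERCENT]
max_split_mb() {
    local mb=$1 secs=$2 timeout=$3 margin=${4:-20}
    # rate = mb / secs; budget = rate * timeout, reduced by the margin.
    # Integer arithmetic, so the result is rounded down.
    echo $(( mb * timeout * (100 - margin) / (secs * 100) ))
}

max_split_mb 1500 900 900   # prints 1200
```

Under those assumptions, 1200 would be a slightly more cautious choice than 1300.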

I just don’t like that it has to split a backup into a lot of files when it could be just one file.

Also, I put my big key in the login settings, so it doesn’t show my root password there.

The key is stored in the file:

/root/.ssh/id_rsa

I removed the BEGIN and END RSA PRIVATE KEY lines.

-Raymond Day

December 27, 2014 at 2:12 pm #73533 udadmin (Keymaster)

Hi Ray,

I’ve emailed you a new version of UpdraftPlus which adds support for chunking/resuming for SFTP. Please give it a try, and let me know how you get on.

David

December 28, 2014 at 3:09 am #73749 Raymond (Participant)

I installed the e-mailed one by unzipping it over the one I had, and answered All to replace everything.

    root@ubuntu-i3:/usr/share/wordpress/wp-content/plugins# unzip updraftplus.trunk.zip
    Archive: updraftplus.trunk.zip
    replace updraftplus/readme.txt? [y]es, [n]o, [A]ll, [N]one, [r]ename: A

Then I ran it, but it still gets errors.

Here is what WordPress says:

Sat, December 27, 2014 20:28 - next resumption: 4 (after 818s) - show log - delete schedule

Warning: A very large file was encountered: uploads/2010/07/Just got in What it's like.ogg (size: 290.8 Mb)

Warning: A very large file was encountered: uploads/2010/06/How I left Dairy Sun 6-6-2010.ogg (size: 259.5 Mb)

Warning: A very large file was encountered: uploads/2014/10/MAH00131.mp4 (size: 309.8 Mb)

Warning: A very large file was encountered: uploads/2014/03/After-2-hours-made-room-in-the-cooler.mp4 (size: 253 Mb)

Warning: A very large file was encountered: uploads/2014/11/MAH00143.mp4 (size: 703.5 Mb)

Warning: A very large file was encountered: uploads/2014/11/MAH00138.mp4 (size: 285.7 Mb)

Warning: A very large file was encountered: uploads/2014/11/MAH00139.mp4 (size: 410 Mb)

Warning: A very large file was encountered: uploads/2014/06/7-AD-skids-in-cooler.-I-got-1-by-this-time-IONX0006.mp4 (size: 272.9 Mb)

Warning: A very large file was encountered: uploads/2014/08/Nice-Sunday-at-work-B4-I-clocked-in-IONX0032.mp4 (size: 257.8 Mb)

Warning: A very large file was encountered: uploads/2008/10/Beth_Lisa_Gracey_Wall_1988.avi (size: 267.9 Mb)

Waiting until scheduled time to retry because of errors

You can see it’s on its fourth try. So I am sure it will try and try and then give up.

-Raymond Day

December 28, 2014 at 11:45 am #73888 Raymond (Participant)

Looks like it worked automatically this time!

It looks like it was working through the night. It takes hours to upload, I guess about 8 or so, but it looks like it worked.

Here is the end of the log for it.

35901.376 (14) Recording as successfully uploaded: backup_2014-12-27-2028_my_weblog_36b445fb207e-uploads24.zip (aa0eb3f7de7c74ebf314ddf4530aec72)

35901.452 (14) Deleting local file: backup_2014-12-27-2028_my_weblog_36b445fb207e-uploads24.zip: OK

35901.452 (14) SCP/SFTP upload: attempt: backup_2014-12-27-2028_my_weblog_36b445fb207e-others.zip

35902.025 (14) Sftp chunked upload: 11.7 % uploaded

35902.594 (14) Sftp chunked upload: 23.5 % uploaded

35903.858 (14) Sftp chunked upload: 35.2 % uploaded

35904.441 (14) Sftp chunked upload: 47 % uploaded

35905.015 (14) Sftp chunked upload: 58.7 % uploaded

35905.581 (14) Sftp chunked upload: 70.5 % uploaded

35906.152 (14) Sftp chunked upload: 82.2 % uploaded

35906.719 (14) Sftp chunked upload: 93.9 % uploaded

35907.017 (14) Recording as successfully uploaded: backup_2014-12-27-2028_my_weblog_36b445fb207e-others.zip (204abae3f9fc541233e77f9140369e41)

35907.020 (14) Deleting local file: backup_2014-12-27-2028_my_weblog_36b445fb207e-others.zip: OK

35907.021 (14) SCP/SFTP upload: attempt: backup_2014-12-27-2028_my_weblog_36b445fb207e-db.gz

35907.596 (14) Sftp chunked upload: 12.6 % uploaded

35908.169 (14) Sftp chunked upload: 25.1 % uploaded

35908.741 (14) Sftp chunked upload: 37.7 % uploaded

35909.314 (14) Sftp chunked upload: 50.2 % uploaded

35909.903 (14) Sftp chunked upload: 62.8 % uploaded

35910.477 (14) Sftp chunked upload: 75.3 % uploaded

35911.053 (14) Sftp chunked upload: 87.9 % uploaded

35911.608 (14) Recording as successfully uploaded: backup_2014-12-27-2028_my_weblog_36b445fb207e-db.gz (3b9668a4dda24b1552b63ffbaf319d10)

35911.611 (14) Deleting local file: backup_2014-12-27-2028_my_weblog_36b445fb207e-db.gz: OK

35911.613 (14) Retain: beginning examination of existing backup sets; user setting: retain_files=2, retain_db=2

35911.613 (14) Number of backup sets in history: 2

35911.614 (14) Examining backup set with datestamp: 1419730117 (Dec 28 2014 01:28:37)

35911.614 (14) 1419730117: db: this set includes a database (backup_2014-12-27-2028_my_weblog_36b445fb207e-db.gz); db count is now 1

35911.615 (14) 1419730117: this backup set remains non-empty (1/1); will retain in history

35911.616 (14) Examining backup set with datestamp: 1419697005 (Dec 27 2014 16:16:45)

35911.616 (14) 1419697005: db: this set includes a database (backup_2014-12-27-1116_my_weblog_63a743f446f4-db.gz); db count is now 2

35911.618 (14) 1419697005: this backup set remains non-empty (1/1); will retain in history

35911.618 (14) Retain: saving new backup history (sets now: 2) and finishing retain operation

35911.619 (14) Resume backup (36b445fb207e, 14): finish run

35911.620 (14) There were no errors in the uploads, so the 'resume' event (15) is being unscheduled

35911.622 (14) No email will/can be sent - the user has not configured an email address.

35911.623 (14) The backup apparently succeeded (with warnings) and is now complete

It uploaded all 24 .zip backups this time.

I used the one you e-mailed me. Seems like it works.

-Raymond Day

December 29, 2014 at 9:32 am #74262 udadmin (Keymaster)

Hi Ray,

Great – glad it worked for you!

Waiting until scheduled time to retry because of errors

When you see this, it’s best to wait until the job gives a final finishing state. UpdraftPlus has various re-try strategies, to try to overcome temporary conditions.

Best wishes,

David

- The topic ‘It could not upload it all!’ is closed to new replies.